New Offering Delivers a Unique Fusion Architecture That’s Being Leveraged by Industry-Leading AI Pioneers Like Cohere, CoreWeave, and NVIDIA to Deliver Breakthrough Performance Gains and Reduce Infrastructure Requirements For Massive AI Training and Inference Workloads

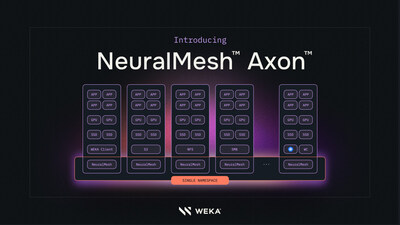

PARIS and CAMPBELL, Calif., July 8, 2025 /PRNewswire/ — From RAISE SUMMIT 2025: WEKA unveiled NeuralMesh Axon, a breakthrough storage system that leverages an innovative fusion architecture designed to address the fundamental challenges of running exascale AI applications and workloads. NeuralMesh Axon seamlessly fuses with GPU servers and AI factories to streamline deployments, reduce costs, and significantly enhance AI workload responsiveness and performance, transforming underutilized GPU resources into a unified, high-performance infrastructure layer.

Building on the company’s recently announced NeuralMesh storage system, the new offering enhances its containerized microservices architecture with powerful embedded functionality. This enables AI pioneers, AI cloud providers, and neocloud service providers to accelerate AI model development at extreme scale, particularly when combined with NVIDIA AI Enterprise software stacks for advanced model training and inference optimization. NeuralMesh Axon also supports real-time reasoning with significantly improved time-to-first-token and overall token throughput, enabling customers to bring innovations to market faster.

AI Infrastructure Obstacles Compound at Exascale

Performance is make-or-break for large language model (LLM) training and inference workloads, especially when running at extreme scale. Organizations that run massive AI workloads on traditional storage architectures, which rely on replication-heavy approaches, waste NVMe capacity, face significant inefficiencies, and struggle with unpredictable performance and resource allocation.

The reason? Traditional architectures weren’t designed to process and store massive volumes of data in real time. They create latency and bottlenecks in data pipelines and AI workflows that can cripple exascale AI deployments. Underutilized GPU servers and outdated data architectures turn premium hardware into idle capital, resulting in costly downtime for training workloads.

Inference workloads struggle with memory-bound barriers, including key-value (KV) caches and hot data, which reduce throughput and increase infrastructure strain. Limited KV cache offload capacity creates data access bottlenecks and complicates resource allocation for incoming prompts, directly affecting operational expenses and time-to-insight.

Many organizations are transitioning to NVIDIA accelerated compute servers, paired with NVIDIA AI Enterprise software, to address these challenges. However, without modern storage integration, they still encounter significant limitations in pipeline efficiency and overall GPU utilization.

Built For The World’s Largest and Most Demanding Accelerated Compute Environments

To address these challenges, NeuralMesh Axon’s high-performance, resilient storage fabric fuses directly into accelerated compute servers, using their local NVMe drives, spare CPU cores, and existing network infrastructure. This unified, software-defined compute and storage layer delivers consistent microsecond latency for both local and remote workloads, outpacing traditional network file protocols like NFS.

Additionally, when combined with WEKA’s Augmented Memory Grid capability, NeuralMesh Axon can provide near-memory speeds for KV cache loads at massive scale. Unlike replication-heavy approaches that squander aggregate capacity and collapse under failures, NeuralMesh Axon’s unique erasure coding design tolerates up to four simultaneous node losses and sustains full throughput during rebuilds. It also enables predefined resource allocation across existing NVMe, CPU cores, and networking resources, transforming isolated disks into a memory-like storage pool at exascale and beyond while providing consistent low-latency access to all addressable data.
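The capacity argument against replication can be made concrete with simple arithmetic. The sketch below assumes a hypothetical 16+4 erasure-coded stripe built on an MDS code such as Reed-Solomon, with each shard placed on a different node; the release states only that the design tolerates up to four simultaneous node losses and does not give WEKA’s actual stripe width. `ProtectionScheme`, `usable_tb`, and the layout constants are illustrative names, not part of any WEKA interface.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProtectionScheme:
    """A data-protection layout: each stripe holds `data` user shards plus `redundancy` extra shards."""
    name: str
    data: int        # shards carrying user data (1 for plain replication)
    redundancy: int  # extra full copies (replication) or parity shards (erasure coding)

    @property
    def usable_fraction(self) -> float:
        # Share of raw NVMe capacity left for user data after protection overhead.
        return self.data / (self.data + self.redundancy)

    @property
    def failures_tolerated(self) -> int:
        # Assuming every shard sits on a distinct node and an MDS code (or full
        # copies), losing up to `redundancy` nodes still leaves each stripe readable.
        return self.redundancy


def usable_tb(raw_tb: float, scheme: ProtectionScheme) -> float:
    """Usable capacity in TB for a given raw capacity in TB."""
    return raw_tb * scheme.usable_fraction


# Hypothetical layouts for illustration only; WEKA's real stripe geometry is not stated.
REPLICATION_3X = ProtectionScheme("3-way replication", data=1, redundancy=2)
REPLICATION_5X = ProtectionScheme("5-way replication", data=1, redundancy=4)
EC_16_4 = ProtectionScheme("16+4 erasure coding", data=16, redundancy=4)

if __name__ == "__main__":
    raw = 1000.0  # TB of raw NVMe across the cluster (illustrative figure)
    for scheme in (REPLICATION_3X, REPLICATION_5X, EC_16_4):
        print(f"{scheme.name}: {usable_tb(raw, scheme):.1f} TB usable, "
              f"survives {scheme.failures_tolerated} node losses")
```

Under these assumed layouts, matching four-failure tolerance with replication leaves only a fifth of raw capacity usable, while a 16+4 stripe keeps 80%, which is the trade-off the paragraph above alludes to.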

Cloud service providers and AI innovators operating at exascale require infrastructure solutions that can match the exponential growth in model complexity and dataset sizes. NeuralMesh Axon is specifically designed for organizations operating at the forefront of AI innovation that require immediate, extreme-scale performance rather than gradual scaling over time. This includes AI cloud providers and neoclouds building AI services, regional AI factories, major cloud providers developing AI solutions for enterprise customers, and large enterprise organizations deploying the most demanding AI inference and training solutions, all of which must scale quickly and optimize their AI infrastructure investments to support rapid innovation cycles.

Delivering Game-Changing Performance for Accelerated AI Innovation

Early adopters, including Cohere, the industry’s leading security-first enterprise AI company, are already seeing transformational results.

Cohere is among WEKA’s first customers to deploy NeuralMesh Axon to power its AI model training and inference workloads. Faced with high innovation costs, data transfer bottlenecks, and underutilized GPUs, Cohere first deployed NeuralMesh Axon in the public cloud to unify its AI stack and streamline operations.

“For AI model builders, speed, GPU optimization, and cost-efficiency are mission-critical. That means using less hardware, generating more tokens, and running more models—without waiting on capacity or migrating data,” said Autumn Moulder, vice president of engineering at Cohere. “Embedding WEKA’s NeuralMesh Axon into our GPU servers enabled us to maximize utilization and accelerate every step of our AI pipelines. The performance gains have been game-changing: Inference deployments that used to take five minutes can occur in 15 seconds, with 10 times faster checkpointing. Our team can now iterate on and bring revolutionary new AI models, like North, to market with unprecedented speed.”

To improve training and help develop North, Cohere’s secure AI agents platform, the company is deploying WEKA’s NeuralMesh Axon on CoreWeave Cloud, creating a robust foundation to support real-time reasoning and deliver exceptional experiences for Cohere’s end customers.

“We’re entering an era where AI advancement transcends raw compute alone—it’s unleashed by intelligent infrastructure design. CoreWeave is redefining what’s possible for AI pioneers by eliminating the complexities that constrain AI at scale,” said Peter Salanki, CTO and co-founder at CoreWeave. “With WEKA’s NeuralMesh Axon seamlessly integrated into CoreWeave’s AI cloud infrastructure, we’re bringing processing power directly to data, achieving microsecond latencies that reduce I/O wait time and deliver more than 30 GB/s read, 12 GB/s write, and 1 million IOPS to an individual GPU server. This breakthrough approach increases GPU utilization and empowers Cohere with the performance foundation they need to shatter inference speed barriers and deliver advanced AI solutions to their customers.”

“AI factories are defining the future of AI infrastructure built on NVIDIA accelerated compute and our ecosystem of NVIDIA Cloud Partners,” said Marc Hamilton, vice president of solutions architecture and engineering at NVIDIA. “By optimizing inference at scale and embedding ultra-low latency NVMe storage close to the GPUs, organizations can unlock more bandwidth and extend the available on-GPU memory for any capacity. Partner solutions like WEKA’s NeuralMesh Axon deployed with CoreWeave provide a critical foundation for accelerated inferencing while enabling next-generation AI services with exceptional performance and cost efficiency.”

The Benefits of Fusing Storage and Compute For AI Innovation

NeuralMesh Axon delivers immediate, measurable improvements for AI builders and cloud service providers operating at exascale, including:

- Expanded Memory With Accelerated Token Throughput: Integrates tightly with WEKA’s Augmented Memory Grid technology, extending GPU memory by using the grid as a token warehouse. This has delivered a 20x improvement in time-to-first-token performance across multiple customer deployments, enabling larger context windows and significantly improved token processing efficiency for inference-intensive workloads. NeuralMesh Axon also enables customers to dynamically adjust compute and storage resources and seamlessly supports just-in-time training and just-in-time inference.

- Huge GPU Acceleration and Efficiency Gains: Customers are achieving dramatic performance and GPU utilization improvements with NeuralMesh Axon, with GPU utilization for AI model training workloads exceeding 90%, a three-fold improvement over the industry average. NeuralMesh Axon also reduces rack space, power, and cooling requirements in on-premises data centers, helping to lower infrastructure costs and complexity by leveraging existing server resources.

- Immediate Scale for Massive AI Workflows: Designed for AI innovators who need extreme scale immediately rather than growing into it over time. NeuralMesh Axon’s containerized microservices architecture and cloud-native design enable organizations to scale storage performance and capacity independently while maintaining consistent performance characteristics across hybrid and multicloud environments.

- Enables Teams to Focus on Building AI, Not Infrastructure: Runs seamlessly across hybrid and cloud environments, integrating with existing Kubernetes and container environments to eliminate the need for external storage infrastructure and reduce complexity.
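The memory-extension idea in the first bullet can be illustrated generically. The sketch below is not WEKA’s Augmented Memory Grid API or implementation, which the release does not describe; the class `TieredKVCache`, its methods, and the tier names are hypothetical. It assumes a simple LRU policy: KV blocks for a prompt prefix that no longer fit in a small fast tier (standing in for GPU memory) are demoted to a larger tier (standing in for an NVMe-backed pool) instead of being discarded, so a repeated prefix can be reloaded rather than recomputed.

```python
from __future__ import annotations

from collections import OrderedDict
from typing import Optional


class TieredKVCache:
    """Toy two-tier LRU cache for per-prefix KV blocks (hypothetical, for illustration).

    `hbm` stands in for scarce GPU memory; `offload` stands in for a larger,
    slower shared pool. Entries evicted from `hbm` are demoted to `offload`
    instead of being discarded.
    """

    def __init__(self, hbm_capacity: int, offload_capacity: int) -> None:
        self.hbm: OrderedDict[str, bytes] = OrderedDict()
        self.offload: OrderedDict[str, bytes] = OrderedDict()
        self.hbm_capacity = hbm_capacity
        self.offload_capacity = offload_capacity
        self.stats = {"hbm_hit": 0, "offload_hit": 0, "miss": 0}

    def put(self, prefix: str, kv: bytes) -> None:
        self.offload.pop(prefix, None)  # keep at most one copy of each prefix
        self.hbm[prefix] = kv
        self.hbm.move_to_end(prefix)  # mark as most recently used
        while len(self.hbm) > self.hbm_capacity:
            old_prefix, old_kv = self.hbm.popitem(last=False)  # least recently used
            self.offload[old_prefix] = old_kv  # demote rather than drop
            while len(self.offload) > self.offload_capacity:
                self.offload.popitem(last=False)  # only now is the block truly lost

    def get(self, prefix: str) -> Optional[bytes]:
        if prefix in self.hbm:
            self.stats["hbm_hit"] += 1
            self.hbm.move_to_end(prefix)
            return self.hbm[prefix]
        if prefix in self.offload:
            # Reloading from the offload tier replaces recomputing the prefill.
            self.stats["offload_hit"] += 1
            kv = self.offload.pop(prefix)
            self.put(prefix, kv)  # promote back into the fast tier
            return kv
        self.stats["miss"] += 1  # caller must recompute the KV cache for this prefix
        return None


if __name__ == "__main__":
    cache = TieredKVCache(hbm_capacity=2, offload_capacity=8)
    for p in ("system-prompt", "doc-A", "doc-B"):
        cache.put(p, p.encode())
    cache.get("system-prompt")  # served from the offload tier, not recomputed
    print(cache.stats)
```

In a real inference server the blocks would be tensors moved over PCIe or the network, and whether reloading beats recomputation depends on the offload tier’s bandwidth and latency; the toy cache only shows the bookkeeping.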

“The infrastructure challenges of exascale AI are unlike anything the industry has faced before. At WEKA, we’re seeing organizations struggle with low GPU utilization during training and GPU overload during inference, while AI costs spiral into millions per model and agent,” said Ajay Singh, chief product officer at WEKA. “That’s why we engineered NeuralMesh Axon, born from our deep focus on optimizing every layer of AI infrastructure from the GPU up. Now, AI-first organizations can achieve the performance and cost efficiency required for competitive AI innovation when running at exascale and beyond.”

Availability

NeuralMesh Axon is currently available in limited release for large-scale enterprise AI and neocloud customers, with general availability scheduled for fall 2025. For more information, visit:

- Product Page: https://www.weka.io/product/neuralmesh-axon/

- Solution Brief: https://www.weka.io/resources/solution-brief/weka-neuralmesh-axon-solution-brief

- Blog Post: https://www.weka.io/blog/ai-ml/neuralmesh-axon-reinvents-ai-infrastructure-economics-for-the-largest-workloads/

About WEKA

WEKA is transforming how organizations build, run, and scale AI workflows through NeuralMesh™, its intelligent, adaptive mesh storage system. Unlike traditional data infrastructure, which becomes more fragile as AI environments expand, NeuralMesh becomes faster, stronger, and more efficient as it scales, growing with your AI environment to provide a flexible foundation for enterprise and agentic AI innovation. Trusted by 30% of the Fortune 50 and the world’s leading neoclouds and AI innovators, NeuralMesh maximizes GPU utilization, accelerates time to first token, and lowers the cost of AI innovation. Learn more at www.weka.io, or connect with us on LinkedIn and X.

WEKA and the W logo are registered trademarks of WekaIO, Inc. Other trade names herein may be trademarks of their respective owners.

Photo – https://businesspresswire.com/wp-content/uploads/sites/19/2025/07/weka-debuts-neuralmesh-axon-for-exascale-ai-deployments.jpg

Logo – https://businesspresswire.com/wp-content/uploads/sites/19/2025/07/weka-debuts-neuralmesh-axon-for-exascale-ai-deployments-1.jpg

View original content: https://www.prnewswire.co.uk/news-releases/weka-debuts-neuralmesh-axon-for-exascale-ai-deployments-302499571.html